12 December 2022

Deepfakes and brand protection: “Deepfake” refers to AI technology that synthesizes faces and voices, enabling people to impersonate almost anyone. As the technology behind fake video and audio improves and becomes more accessible, brands face the risk of:

- their most valuable assets being hijacked by digital imposters.

- being scammed by bad actors.

- their customers being scammed.

Deepfake is a new and highly deceptive AI technology that enables malicious users to create convincing, even real-time, fake video and audio of anyone. Criminals and troublemakers alike have used it in recent years. The technology can be deployed on both the dark web and the open internet, bypassing traditional forms of detection and countermeasures.

A deepfake video can be indistinguishable from genuine footage, enabling high-impact impersonation scams and smear campaigns that can damage an organization’s reputation and profits. The risks posed by deepfakes have security implications for everyone.

Actor Miles Fisher appears on the left, while the deepfake depicting him as Tom Cruise is on the right. Chris Um

Deepfake technology convergence

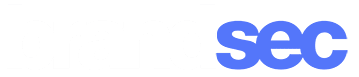

While deepfakes have entertainment value, they also have a scary and sinister side, especially when multiple AI technologies converge. By combining powerful machine learning and artificial intelligence techniques, users can manipulate or generate visual and audio content that easily deceives viewers and listeners. The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as autoencoders or generative adversarial networks (GANs). Deepfake technology is now easily accessible to anyone with an internet connection through apps such as Zao, deepfakesweb.com and many more.
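Many autoencoder-based face-swap tools use a shared encoder with one decoder per person. The encoder learns features common to both faces, such as pose, expression and lighting, and each decoder learns to render one specific person. The swap comes from encoding person A’s frame and decoding it with person B’s decoder. Below is a toy *linear* analogue of that idea, assuming NumPy. It uses random vectors as stand-ins for face crops, and all function names are hypothetical. Real tools train deep convolutional networks (or GANs) on thousands of aligned images.

```python
import numpy as np

# Toy linear analogue of the shared-encoder / two-decoder face-swap design.
# Random vectors stand in for aligned face crops; nothing here is a real tool's API.

def fit_shared_encoder(x_a, x_b, k):
    """Learn ONE linear encoder (top-k principal directions) from both people's images."""
    combined = np.vstack([x_a, x_b])
    mean = combined.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(combined - mean, full_matrices=False)
    return mean, vt[:k]

def encode(x, mean, basis):
    """Project images onto the shared k-dimensional code space."""
    return (x - mean) @ basis.T

def _with_bias(codes):
    """Append a constant column so each decoder can learn an offset."""
    return np.hstack([codes, np.ones((codes.shape[0], 1))])

def fit_decoder(codes, x):
    """Least-squares linear decoder that maps codes back to ONE person's images."""
    weights, *_ = np.linalg.lstsq(_with_bias(codes), x, rcond=None)
    return weights

def decode(codes, weights):
    """Render codes as images using one person's decoder."""
    return _with_bias(codes) @ weights

rng = np.random.default_rng(0)
d, k, n = 64, 8, 200                  # hypothetical: 64-value "images", 8-dim codes, 200 samples each
x_a = rng.normal(size=(n, d)) + 1.0   # stand-in for person A's face crops
x_b = rng.normal(size=(n, d)) - 1.0   # stand-in for person B's face crops

mean, basis = fit_shared_encoder(x_a, x_b, k)
dec_a = fit_decoder(encode(x_a, mean, basis), x_a)  # renders person A
dec_b = fit_decoder(encode(x_b, mean, basis), x_b)  # renders person B

# The "swap": encode frames of person A, then render them with person B's decoder.
swapped = decode(encode(x_a[:5], mean, basis), dec_b)
print(swapped.shape)  # (5, 64)
```

The single shared encoder is the key design choice. Because both decoders read the same code space, a code extracted from one person can be rendered as the other.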

Audio deepfakes are becoming particularly worrying. They clone someone’s voice via either text-to-speech or speech-to-speech conversion. The latter works like voice skinning, in which a speaker layers another person’s voice over their own in real time.

New conversational AI systems such as ChatGPT could add rocket fuel to the effectiveness of audio deepfakes. ChatGPT is designed to mimic human-like conversation in response to user prompts, drawing on a vast body of online text and enormous computing power to perform written tasks. It can produce fluent, intelligent-sounding prose on almost any topic. Pairing that automated conversation with a fake voice or video is a genuinely scary prospect.

Deepfake technology is accessible to anyone with an internet connection. Source: deepfakesweb.com

U.K. energy firm scammed through the use of AI

Criminals used deepfake audio to impersonate the voice of the chief executive of a U.K. energy firm’s German parent company, and successfully engineered the transfer of €220,000 to an offshore bank account.

The CEO of the U.K. energy firm believed he was speaking on the phone with his boss, who asked him to send funds to a Hungarian supplier. In fact, the voice belonged to a fraudster who used AI voice technology to spoof the German chief executive. The CEO reportedly recognized the subtle German accent in his boss’s voice, and said it even carried the man’s “melody.”

Binance CCO Targeted by Deepfake Attacks

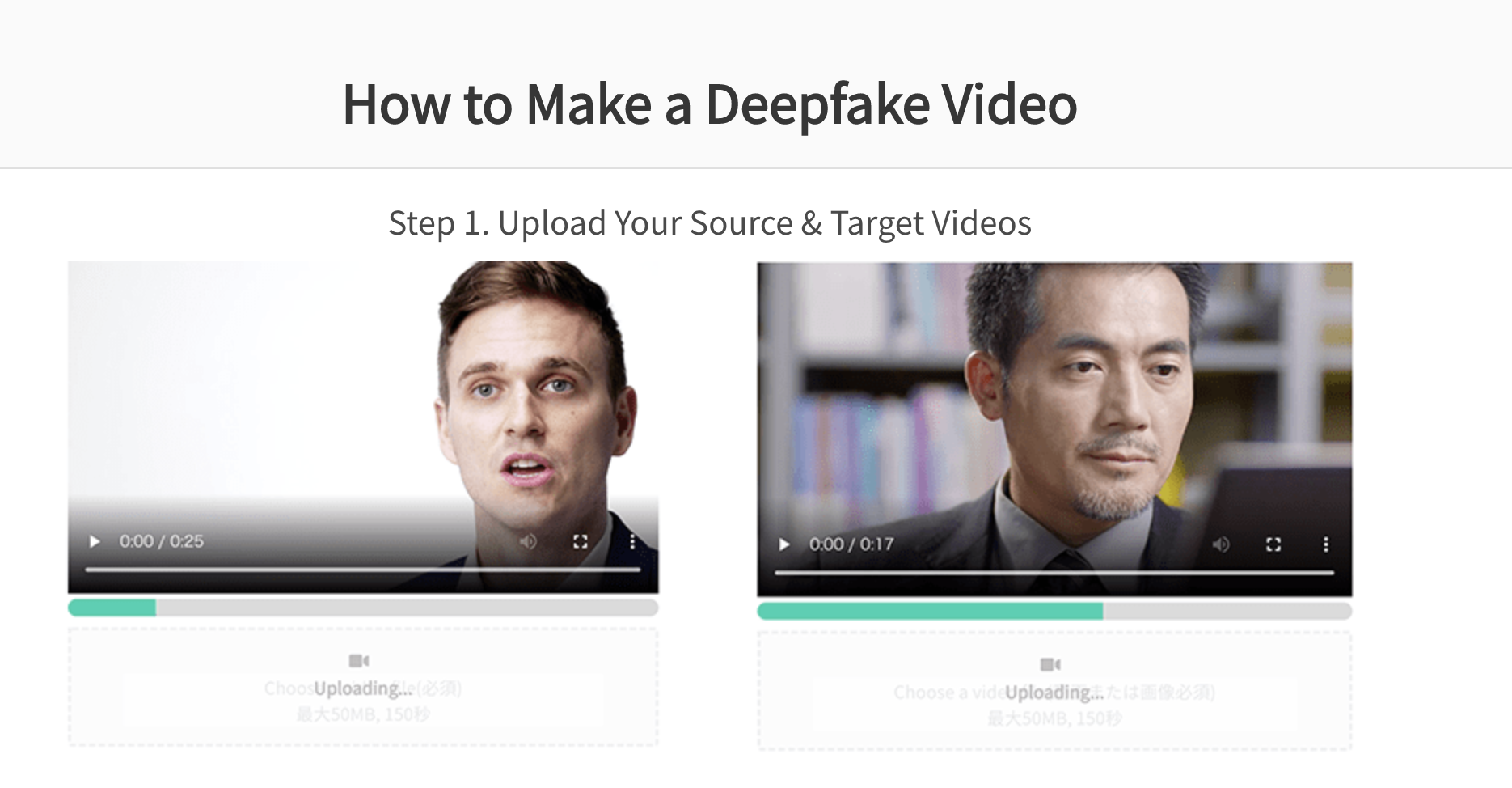

Patrick Hillmann, Chief Communications Officer at Binance, has led one of the world’s largest cybersecurity teams and managed the response to some of the largest data breaches in history (US OPM, Ashley Madison, etc.). Even so, he reported that scammers had used a deepfake of him to trick prospective customers.

He received several online messages from people he hadn’t met, thanking him for taking the time to meet with project teams regarding potential opportunities to list their assets on Binance.com.

It turned out that sophisticated cybercriminals had built a deepfake of Hillmann from footage of his news interviews and TV appearances over the years. The fake was refined enough to fool several highly intelligent members of the crypto community.

The moment the Binance CCO discovered that he was being deepfaked

Brand Protection and Deepfakes: Monitoring

There is a lot of academic and commercial research into the best methods for detecting deepfake videos. Traditional brand protection agencies search metadata, such as alt tags, for associated keywords, but this approach is easily gamed. A more promising approach is to use algorithms that recognize patterns and pick up the subtle inconsistencies that arise in deepfake videos. However, this is likely to become a technological game of chess as brand protection agencies and bad actors try to outmaneuver each other.
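Keyword matching is easy to game, and a minimal sketch of metadata-only monitoring shows why. It assumes a hypothetical watch-list and a video described only by its title, tags and alt text. Changing a single character in the title is enough to slip past the check, even though the video content itself is unchanged.

```python
import re

# Hypothetical watch-list; a real monitoring service would use its client's own terms.
BRAND_TERMS = {"binance", "hillmann"}

def flags_metadata(title: str, tags: list[str], alt_text: str) -> bool:
    """Return True if any watch-listed term appears as a whole word in the text metadata."""
    text = " ".join([title, alt_text, *tags]).lower()
    words = set(re.findall(r"[a-z0-9]+", text))
    return not BRAND_TERMS.isdisjoint(words)

honest_upload = flags_metadata("Binance exec interview", ["crypto"], "Patrick Hillmann on TV")
evasive_upload = flags_metadata("B1nance exec interview", ["crypto"], "")  # one character swapped
print(honest_upload, evasive_upload)  # True False
```

The check never looks at the pixels or audio, which is why content-based detection is the more promising route.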

Current detection technology is not yet reliable. A coalition of leading technology companies recently hosted the Deepfake Detection Challenge to accelerate the development of technology for identifying manipulated content. The winning model was 65% accurate on the holdout set of 4,000 videos.
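Taking the reported figures at face value, the arithmetic shows how far that is from dependable:

```latex
\begin{aligned}
0.65 \times 4000 &= 2600 \quad \text{videos classified correctly} \\
4000 - 2600 &= 1400 \quad \text{videos misclassified } (35\%\text{, roughly one in three})
\end{aligned}
```

The misclassified videos include both fakes that were missed and genuine videos wrongly flagged. A brand monitoring thousands of uploads would face a substantial number of each.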

Microsoft released a tool called Video Authenticator, which analyzes photos and videos and generates a confidence score to help consumers and brands determine whether the media is real or a deepfake.

The tool uses AI to detect signs of manipulation that may be invisible to the human eye. One such sign is grayscale pixels at the boundary where the artificially generated element was blended into the image. Microsoft built the tool using a public dataset of deepfake videos and tested it against an even larger dataset that Facebook had created for its own deepfake research.
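The sketch below is *not* Microsoft’s method. It is a hypothetical hand-written heuristic in the spirit of the grayscale-boundary cue described above. It assumes NumPy, an RGB frame with values in [0, 1], and a face mask already supplied by some face detector. It measures what fraction of the pixels along the edge of the masked region are nearly grey, then averages the per-frame scores into a video-level score. A production detector would learn such cues with a trained neural network rather than rely on a fixed threshold.

```python
import numpy as np

# Hypothetical heuristic inspired by the "grayscale pixels at the blending boundary" cue.
# NOT Microsoft's algorithm; the threshold and helper names are illustrative assumptions.

def saturation(rgb):
    """HSV-style saturation in [0, 1] for a float RGB image with values in [0, 1]."""
    mx = rgb.max(axis=-1)
    mn = rgb.min(axis=-1)
    return np.where(mx > 0, (mx - mn) / np.maximum(mx, 1e-8), 0.0)

def boundary_ring(mask):
    """Mask pixels that have at least one 4-neighbour outside the mask."""
    p = np.pad(mask, 1, mode="edge")
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return mask & ~interior

def grayscale_boundary_score(rgb, mask, sat_threshold=0.05):
    """Fraction of boundary pixels that are nearly grey (higher = more suspicious)."""
    ring = boundary_ring(mask)
    if not ring.any():
        return 0.0
    return float((saturation(rgb)[ring] < sat_threshold).mean())

def video_score(frames, masks):
    """Average the per-frame scores into one video-level score in [0, 1]."""
    return float(np.mean([grayscale_boundary_score(f, m) for f, m in zip(frames, masks)]))

# Usage: a flat, saturated frame with a square "face" region.
frame = np.zeros((32, 32, 3))
frame[...] = [0.8, 0.2, 0.2]
mask = np.zeros((32, 32), dtype=bool)
mask[8:24, 8:24] = True

tampered = frame.copy()
tampered[boundary_ring(mask)] = [0.5, 0.5, 0.5]  # simulate a grey seam around a pasted face

print(grayscale_boundary_score(frame, mask))         # 0.0
print(grayscale_boundary_score(tampered, mask))      # 1.0
print(video_score([frame, tampered], [mask, mask]))  # 0.5
```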

Similarly, Facebook used visual AI to build a dataset from which its own algorithms can learn to detect fake videos, and other social media giants have built similar programs.

Microsoft’s Video Authenticator tool

Brands must stay vigilant

While detection technology plays catch-up, it is important for brands to at least be aware of the deepfake threat and not to take unusual requests from ‘trusted’ people at face value (pun intended).

Businesses can educate their employees on how to identify and prevent deepfake attacks, including recognising the signs of a deepfake video and being wary of suspicious requests or messages. Maintain a healthy suspicion of anyone requesting an urgent payment and offering new bank details, even if the request seems to come from your boss.
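Finance teams can turn this advice into a simple written rule. The sketch below is a hypothetical checklist, not a complete fraud-control system. The field names, the list of channels and the 10,000 approval limit are all illustrative assumptions. Any red flag triggers an out-of-band callback on a number taken from the company directory, never one supplied in the request itself.

```python
from dataclasses import dataclass

# Hypothetical internal-controls check; field names and the threshold are assumptions.
VOICE_OR_VIDEO = {"phone", "voice message", "video call"}  # channels a deepfake can imitate

@dataclass
class PaymentRequest:
    requester: str          # who the request claims to come from
    amount: float
    urgent: bool            # "must go out today", "keep this quiet", etc.
    new_bank_details: bool  # payee account differs from the one on file
    channel: str            # how the request arrived

def red_flags(req: PaymentRequest, limit: float = 10_000.0) -> list[str]:
    """List the warning signs present in a payment request."""
    flags = []
    if req.urgent:
        flags.append("urgent")
    if req.new_bank_details:
        flags.append("new bank details")
    if req.amount >= limit:
        flags.append("above approval limit")
    if req.channel in VOICE_OR_VIDEO:
        flags.append("voice/video only (can be deepfaked)")
    return flags

def requires_callback(req: PaymentRequest) -> bool:
    """Any red flag means: verify via a directory number, not one given in the request."""
    return bool(red_flags(req))

# An invented request, similar in size to the U.K. case above.
request = PaymentRequest("CEO", 220_000, urgent=True, new_bank_details=True, channel="phone")
print(red_flags(request))
print(requires_callback(request))  # True
```

The rule deliberately ignores how convincing the voice sounds, because that is exactly what a deepfake is built to fake.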

About brandsec

brandsec is a corporate domain name management and brand protection company that looks after many of Australia, New Zealand and Asia’s top publicly listed brands. We provide monitoring and enforcement services, DNS, SSL Management, domain name brokerage and dispute management and brand security consultation services.